Welcome to my blog! This is Part 1 of a two-part series about building this site. Part 2 has more details on the Rust server code.

It might’ve been a little silly, but I made this site “from scratch” in Rust instead of using a static site generator like Jekyll, Hugo, or Zola. It’s not that I had any big requirements that those tools couldn’t meet. On the contrary, this site is basically a less-polished version of what comes out of a static site generator: blog posts are written in Markdown, then rendered to HTML.

Sure, I could have just done this with Jekyll running on GitHub Pages, but this way is more fun!

Saying I made the site “from scratch” is not really accurate, because I just wrote a little glue code between some well-known Rust crates:

- Axum for the backend framework

- Tera for HTML templating

- toml for parsing TOML headers in the Markdown files

- pulldown_cmark for Markdown → HTML rendering

Still, it was a good chance to learn a little bit of web stuff, and hopefully by documenting it here I’ll remember more of it.

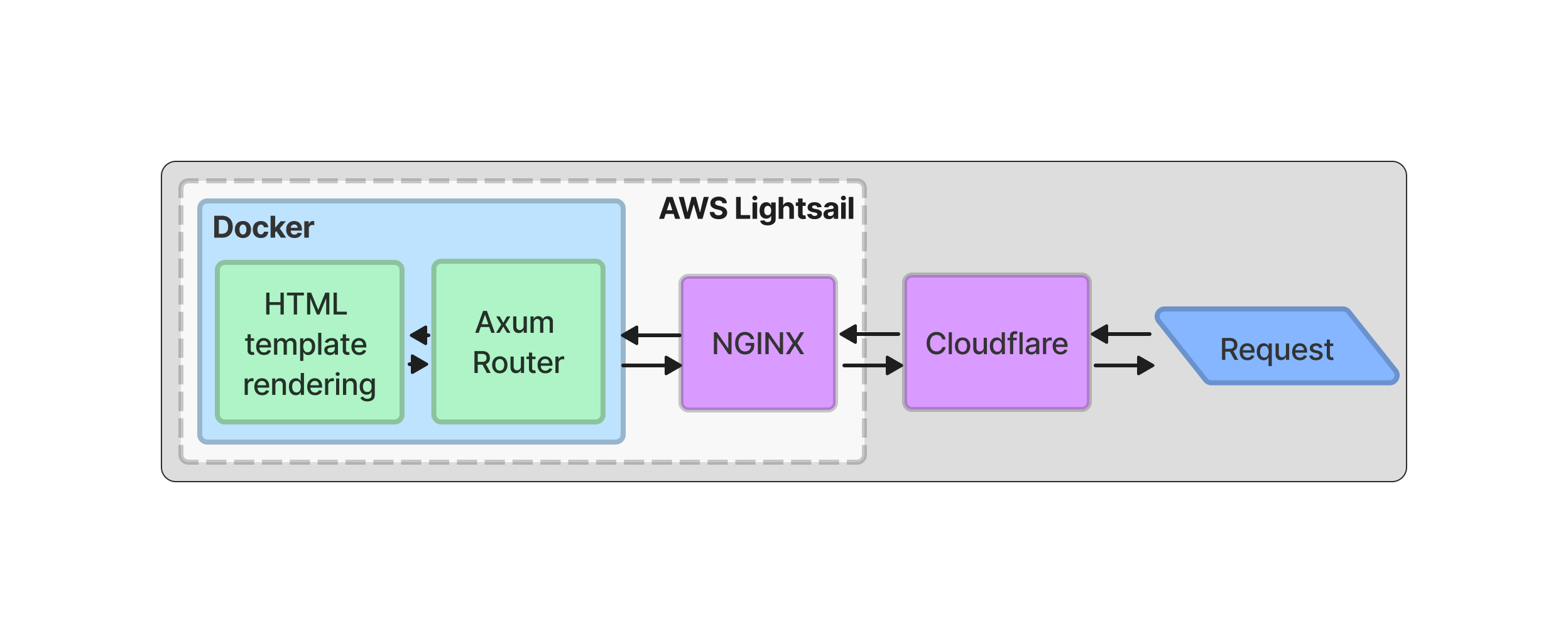

The app is running inside of a Docker container on a AWS Lightsail virtual private server (VPS). The container is behind Nginx for HTTPS support, and the whole thing is behind Cloudflare (free-tier). Here’s a sketch:

All in all, it took about a week of working on this (on and off) to go from an empty project to the site running on the internet. There are still plenty of places where the code can be improved, features added, etc. I’m sure I’ll find many more of them as I write more posts.

In the next few sections, I’ll walk through some of the steps it took for me to get the site online. But first, some motivation…

Table of Contents

- Motivation: why make a personal site

- Overview

- A tiny taste of Docker

- Hosting the app: AWS Lightsail

- It works!

- Continuous deployment (or: GitHub Actions sadness)

- Conclusion

- Footnotes

Motivation: why make a personal site

Since this is my first post, here comes the obligatory “why this blog exists” section. (If you don’t care, feel free to skip ahead to the Overview.) There are three main reasons:

1. Somewhere to document what I’m learning

This is mainly for the benefit of future me: organizing links to important resources, recording how design decisions turned out (good and bad), and having a place to fully flesh out some ideas. This site being public will force me to be a little more organized and polished here than in my personal notes.

2. Somewhere to practice technical writing

After 6 years in grad school, I feel like I became decent at technical writing about physics. Coming to software, some of the basic skills carry over, but I know it’ll take practice to get to where I want to be.

I think an essential part of this will be strengthening my foundational CS knowledge. I learned in grad school that it’s really hard to carve out a clear and simple explanation from a complicated story. Doing so required actually understanding the basics and, equally importantly, having confidence in my knowledge. Hopefully, writing here will help me dive deeper into the fundamentals and gain that confidence in a new field.

3. Somewhere to put myself out there

People who know me well know that I’m an introvert. I don’t really enjoy writing about or talking about myself.

Maybe this blog will eventually be useful to others, maybe it won’t, but I’ve certainly learned a ton from reading other people’s blogs. Hopefully, as I learn and write more, this can grow into something that someone else will want to read. But it definitely won’t if I don’t share it with anyone!

Okay, with that all out of the way, let’s talk about the site.

Overview

Going beyond the sketch, here are a few more details about the site design:

- Backend: Written in Rust using axum.

- Blog posts are written in Markdown, rendered to HTML with pulldown_cmark, and then injected along with some post metadata into HTML templates using tera.

- Pages are just HTML and CSS. I used Bulma and the bulma-prefers-dark extension for styling. The only JavaScript on this page is code highlighting using highlight.js.

- This isn’t a totally static website, even though it looks like one. HTML templates are rendered on each request (minus any caching done by Nginx / Cloudflare). Currently, this is a bit wasteful, but maybe later I can do something fun with this.

- There’s no database yet, despite the site not being static. Currently, the Markdown files are just read in when the app is initialized and their contents are stored on the heap in a simple Vec. (Yes, this means that the website breaks for a few seconds when I add a post.) For learning purposes, I’d like to eventually add a database connection (maybe using SQLx?) but haven’t gotten around to it yet.

- The Rust app is built and bundled with templates and static assets in a container using Docker, and deployed to an AWS Lightsail instance. The app runs behind Nginx, which serves as a basic reverse proxy and handles HTTPS support. Building the container, pushing it to DockerHub, and deploying it to my AWS instance is automated in GitHub Actions.
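To make the "read the Markdown files once at startup and keep them in a Vec" idea concrete, here is a minimal sketch using only the standard library. It is not the site's actual code: the `Post` struct, the function names, and especially the `+++` header delimiter are assumptions made for illustration. Parsing the header text itself would be handed off to the toml crate, which is left out here.

```rust
use std::fs;
use std::io;
use std::path::Path;

/// One post held in memory: the raw TOML header text plus the Markdown body.
/// (Hypothetical struct, not taken from the real site.)
#[derive(Debug, Clone, PartialEq)]
struct Post {
    slug: String,
    header: String,
    body: String,
}

/// Split a post file into (header, body), assuming the header sits at the very
/// top of the file between two `+++` lines (an assumption of this sketch).
fn split_front_matter(source: &str) -> Option<(&str, &str)> {
    let rest = source.strip_prefix("+++\n")?;
    let end = rest.find("\n+++\n")?;
    let header = &rest[..end];
    let body = rest[end + "\n+++\n".len()..].trim_start_matches('\n');
    Some((header, body))
}

/// Read every `.md` file in `dir` once and collect the results into a Vec.
fn load_posts(dir: &Path) -> io::Result<Vec<Post>> {
    let mut posts = Vec::new();
    for entry in fs::read_dir(dir)? {
        let path = entry?.path();
        if path.extension().and_then(|ext| ext.to_str()) != Some("md") {
            continue;
        }
        let source = fs::read_to_string(&path)?;
        // Files without a recognizable header are skipped in this sketch.
        if let Some((header, body)) = split_front_matter(&source) {
            let slug = path
                .file_stem()
                .and_then(|stem| stem.to_str())
                .unwrap_or_default()
                .to_string();
            posts.push(Post {
                slug,
                header: header.to_string(),
                body: body.to_string(),
            });
        }
    }
    // read_dir order is platform-dependent, so sort for a stable listing.
    posts.sort_by(|a, b| a.slug.cmp(&b.slug));
    Ok(posts)
}

fn main() {
    // "markdown" mirrors the directory name copied into the container.
    match load_posts(Path::new("markdown")) {
        Ok(posts) => println!("loaded {} posts", posts.len()),
        Err(err) => eprintln!("could not load posts: {err}"),
    }
}
```

Because the Vec is filled only once, a new post shows up only after the process restarts, which is the trade-off mentioned in the list above.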

For the remainder of this post, I’m going to focus on the deployment of the app. I actually found this process to be a little more interesting than writing the server-side Rust code, since I got to learn a little about Docker, AWS, Cloudflare, and Nginx. From now on, we’ll just assume that we have the app working as described by the bullet points above. If you want to read more about the implementation, check out Part 2.

A tiny taste of Docker 🐋

I hadn’t really done any deployment of code to a remote server before this project, so I had no idea how to go about doing this initially.

After doing some Googling, I decided to just build the app in a container with the static assets (Markdown, HTML templates, a tiny bit of CSS) and push it up to a private DockerHub repo. Then I could easily pull and run the container by SSH-ing into the server.

I also considered just SFTP-ing the binary and assets over to the server. I’m guessing that this wouldn’t be hard to get working, especially because I don’t have to worry about cross-compiling or anything (I’m running Linux locally). But I figured in the long run it would be a little bit simpler using Docker. Plus, I’d never used Docker before so I figured it was time to learn a little bit.

I followed a nice blog post from LogRocket for building a Rust app inside of Docker.

Here’s the first part of my Dockerfile, which builds the binary:

# First part of Dockerfile
FROM rust:1.67 as builder

# New cargo project
RUN USER=root cargo new --bin site
WORKDIR ./site

# Copy manifests
COPY ./Cargo.lock ./Cargo.lock
COPY ./Cargo.toml ./Cargo.toml

# Cache dependencies
RUN cargo build --release && rm src/*.rs

ADD . ./
RUN rm ./target/release/deps/site*
RUN cargo build --release

As explained in the LogRocket post, this build consists of two stages. The first stage starts a new Cargo project in the container and copies over the Cargo.lock and Cargo.toml files to declare the project’s dependencies. We then build the project in release mode, without having copied over our actual src/ directory. This caches the dependencies so they don’t have to be built from scratch every time the container is built, only when we add or remove a dependency (see footnote 1). The second stage then copies our actual src/ directory to the container and builds the actual final binary.
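The caching behavior is easier to see in a toy model. The sketch below is not how Docker is implemented; it only imitates the rule that a step is reused when its parent layer, its instruction text, and its copied-in files are all unchanged. The instruction strings are abbreviated from the Dockerfile above, while the fingerprint strings like `deps-v1` are made-up stand-ins for file checksums.

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashSet;
use std::hash::{Hash, Hasher};

/// One build step: the instruction text plus a stand-in fingerprint for any
/// files it copies into the image (empty for RUN steps).
struct Step<'a> {
    instruction: &'a str,
    inputs: &'a str,
}

/// Toy cache key: depends on the parent layer, the instruction, and its inputs.
fn layer_key(parent: u64, step: &Step) -> u64 {
    let mut hasher = DefaultHasher::new();
    parent.hash(&mut hasher);
    step.instruction.hash(&mut hasher);
    step.inputs.hash(&mut hasher);
    hasher.finish()
}

/// "Build" the steps and return the instructions that actually had to run.
fn build<'a>(steps: &[Step<'a>], cache: &mut HashSet<u64>) -> Vec<&'a str> {
    let mut parent = 0;
    let mut executed = Vec::new();
    for step in steps {
        let key = layer_key(parent, step);
        // `insert` returns true when this key was not in the cache yet.
        if cache.insert(key) {
            executed.push(step.instruction);
        }
        // A changed key here changes the key of every later step too.
        parent = key;
    }
    executed
}

/// Simplified builder stage with hypothetical fingerprints for the manifests
/// and the source tree.
fn builder_stage<'a>(manifests: &'a str, source: &'a str) -> Vec<Step<'a>> {
    vec![
        Step { instruction: "COPY Cargo.lock Cargo.toml", inputs: manifests },
        Step { instruction: "RUN cargo build --release && rm src/*.rs", inputs: "" },
        Step { instruction: "ADD . ./", inputs: source },
        Step { instruction: "RUN cargo build --release", inputs: "" },
    ]
}

fn main() {
    let mut cache = HashSet::new();
    // First build: nothing is cached, so every step runs.
    println!("{:?}", build(&builder_stage("deps-v1", "src-v1"), &mut cache));
    // Only the source changed: the dependency build is reused.
    println!("{:?}", build(&builder_stage("deps-v1", "src-v2"), &mut cache));
    // A dependency changed: every step from the manifest copy onward re-runs.
    println!("{:?}", build(&builder_stage("deps-v2", "src-v2"), &mut cache));
}
```

In this model, editing a post or a source file only re-runs the last two steps, which is the point of copying the manifests before the rest of the project.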

The second part of the Dockerfile uses a Debian base image. This is where the app will run. Here, we copy the binary from the build stage over to the Debian container, along with the assets needed to render pages. And finally, we provide a CMD to tell the container how to run the app.

# Second half of Dockerfile
FROM debian:bullseye-slim
ARG APP=/usr/src/app

RUN apt-get update \
    && apt-get install -y tzdata \
    && rm -rf /var/lib/apt/lists/*

EXPOSE 8000

ENV TZ=Etc/UTC \
    APP_USER=appuser

RUN groupadd $APP_USER \
    && useradd -g $APP_USER $APP_USER \
    && mkdir -p ${APP}

COPY --from=builder /site/target/release/site \
    ${APP}/site
COPY ./assets ${APP}/assets
COPY ./markdown ${APP}/markdown
COPY ./templates ${APP}/templates

RUN chown -R $APP_USER:$APP_USER ${APP}

USER $APP_USER
WORKDIR ${APP}

CMD ["./site"]

After doing this, I could run the app locally without any trouble with

docker run -p 8000:8000 $CONTAINER_NAME

Hosting the app: AWS Lightsail

I decided to choose one of AWS, Google Cloud, and Azure for cloud hosting. I figured it would be good to get (semi-) acquainted with at least one of the major providers.

As a total beginner, I found AWS’s site to be the most comprehensible. I was not looking to spend a lot of money, and AWS Lightsail’s cheapest option seemed doable at $3.50/month (with the first 3 months free). This was for a virtual private server (VPS) with 512 MB RAM and 1 vCPU. It was pretty quick to sign up for a free-tier AWS account and get myself an Ubuntu instance.

Lightsail also has a container service, but it’s more expensive at $7/month (or more). Since I only plan to run one instance initially, I figured the container service was not necessary.

It works!

After SSH-ing into the server, installing Docker, and pulling and running my container, I could navigate to the server’s IP address in my local browser to get the first glimpse of my site on the Real Internet. Yay!

I got a free Cloudflare account for fun and bought my domain through them for $9/year. Through Cloudflare’s free services, I also get caching, CDN, and analytics, which is pretty awesome!

At this point, my domain was working, but I still had to get encryption going. Actually, as soon as I started using Cloudflare, Firefox stopped thinking my site was sketchy. However, I hadn’t yet done anything to set up SSL on the server, so only the traffic between the browser and Cloudflare was encrypted and not the traffic from Cloudflare to my server.

I decided to use Cloudflare’s Origin CA to generate certs. Instead of trying to set up TLS in my axum server (though the axum repo has a nice example), I decided to just put my app behind Nginx. Having never used Nginx before, I found these two pages super helpful:

Maybe using Nginx for this is a bit overkill, but at least now I sort of know how to configure it.

Somewhere in there, I also wasted time trying to figure out why I couldn’t see the server over IPv6 (I thought I’d messed up the Nginx config), before realizing that I just don’t have IPv6 connectivity here at home 😞

Continuous deployment (or: GitHub Actions sadness)

At this point, there was just one more thing missing. To update the site, I had to build the container locally and push it to my DockerHub repo, then manually SSH into the server and pull and run the new Docker image. I figured this procedure would quickly get annoying, so I took a shot at automating it.

I had initially set up CI through GitHub Actions to run my tests on each push to main, so I decided to extend this to automatically deploy the app. Following this article from the GitHub docs, I was able to build the Docker image and push it to my Docker repo on each run.

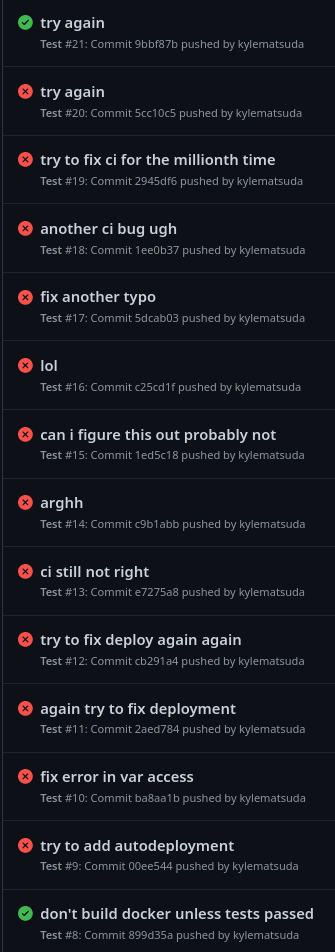

The last piece was adding a shell script to SSH into my server, pull the updated container, and run it. This took an unfortunate number of tries to get right. Here’s a screenshot from my Github Actions workflows pane:

Lol.

The script itself was not very complicated, basically following this blog post on automating Docker deployments. All it did was SSH into the server, log in to Docker, pull the newest version of the container, stop the old container, and run the new one. Most of my failed runs were due to issues with my SSH commands. After I finally got it working, I found this blog post on using SSH in GitHub Actions and ended up migrating things over to the pre-made SSH action used in that post (appleboy/ssh-action).
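For a rough picture of what runs on the server, here is a small Rust sketch that assembles the remote commands as text. It is an illustration rather than my actual script: the image name, container name, and the `remote_script` helper are hypothetical, and the Docker login step is left out because how credentials are passed depends on the setup. The resulting text is what would be handed to `ssh` or to an SSH action.

```rust
/// Build the shell script to run on the server. `image` and `container` are
/// placeholders supplied by the caller (hypothetical names in this sketch).
fn remote_script(image: &str, container: &str, port: u16) -> String {
    [
        // Abort on the first failing command, e.g. a failed pull.
        "set -e".to_string(),
        format!("docker pull {image}"),
        // `|| true` lets the first-ever deploy pass when no old container exists.
        format!("docker stop {container} || true"),
        format!("docker rm {container} || true"),
        // Run detached, publishing the same port on the host and in the container.
        format!("docker run -d --name {container} -p {port}:{port} {image}"),
    ]
    .join("\n")
}

fn main() {
    // Port 8000 matches the EXPOSE line in the Dockerfile; the names are made up.
    let script = remote_script("example/site:latest", "site", 8000);
    println!("{script}");
}
```

Stopping and removing the old container before starting the new one means the site is briefly down during each deploy, which fits a personal blog but would need more care for anything busier.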

Conclusion

If you made it here, thanks for reading to the end of my first blog post!

So far, I’m really glad that I built this site on my own. This was a fun way to gain a bit more familiarity with some common tools and services like Docker, Nginx, Cloudflare, and AWS. Since my use cases were pretty basic, there were a lot of great resources readily available that made it easy to get started. Using GitHub Pages would have had me writing posts much quicker, but I wouldn’t have gotten to learn about all this other stuff!

If you’re interested, please check out Part 2 for more details on the backend code.

Footnotes

1. As a Docker newbie, I was confused about this initially. Each instruction like RUN creates an intermediate image layer that’s basically memoized, so Docker only re-runs a step if the instruction or its inputs changed, or if an earlier step had to be re-run.